Hi All,

Since we will be doing a lot of training on the use of the CDM in the upcoming years, and many more people across the world will be running and inventing queries, I think an easily accessible and manageable CDM query library would be of great value.

Thanks to @mvanzandt, Michael Wichers and others, we now have all the old V4 queries from the OMOP times (http://cdmqueries.omop.org/) converted to V5 on GitHub: https://github.com/OHDSI/OMOP-Queries. This is a good start, but I think it is not yet very accessible for all our stakeholders.

What would be ideal I think is the following:

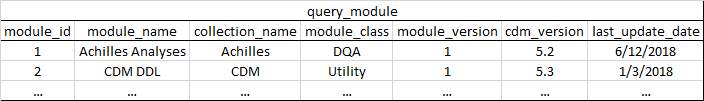

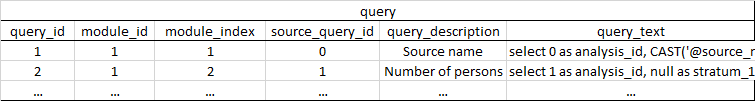

We have the nice ShinyApp that Martijn made to render SQL: http://data.ohdsi.org/SqlDeveloper/. This could easily be extended with a SQL library per domain. It would be nice to standardise the queries in a format such as JSON, so that all the extra information (ID, Description, Example, Field description, Output description) is available in a form the R code can process. The app would automatically load all the JSON files. I also suggest parameterising the SQL so people can enter their CDM schema in the app (Martijn has already implemented that parameters automatically generate an input box).
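To make the JSON idea a bit more concrete, here is a rough sketch of what one library entry and its rendering step might look like. It is written in Python purely for illustration (the real app would do this in R), the key names, the `EXAMPLE-01` ID and the sample query are all made up, and the `@parameter` substitution is a simple stand-in, not the actual SqlRender logic.

```python
import json
import re

# Hypothetical library entry. The keys mirror the fields listed above
# (ID, Description, Example, Field description, Output description),
# but the exact names and the sample query are assumptions.
ENTRY_JSON = """
{
  "id": "EXAMPLE-01",
  "domain": "person",
  "description": "Count persons per gender concept.",
  "sql": "SELECT gender_concept_id, COUNT(*) AS num_persons FROM @cdm.person GROUP BY gender_concept_id;",
  "parameters": {"cdm": "Schema that holds the CDM tables"},
  "output": {
    "gender_concept_id": "Concept ID of the gender",
    "num_persons": "Number of persons with that gender"
  }
}
"""


def find_parameters(sql):
    """Return the distinct @parameter names in order of first appearance."""
    seen = []
    for name in re.findall(r"@(\w+)", sql):
        if name not in seen:
            seen.append(name)
    return seen


def render(sql, **values):
    """Replace every @parameter with the supplied value (illustrative only)."""
    missing = [p for p in find_parameters(sql) if p not in values]
    if missing:
        raise ValueError("No value supplied for: " + ", ".join(missing))
    return re.sub(r"@(\w+)", lambda m: values[m.group(1)], sql)


entry = json.loads(ENTRY_JSON)
print(find_parameters(entry["sql"]))        # names the app would turn into input boxes
print(render(entry["sql"], cdm="my_schema"))
```

The list returned by `find_parameters` is what the app could use to decide which input boxes to show, and `render` fails loudly when a parameter has no value instead of emitting broken SQL.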

We could make a dropdown to select the domain, which then produces a searchable list of queries (possibly with a hover-over effect to see the description text). If the user clicks on a query, it is added to the SQL box and rendered according to the settings. Below the SQL boxes we could print the explanation of the query.
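The selection logic behind such a dropdown and search box could be quite small. The sketch below is again in Python for illustration only; the `QueryEntry` class, the sample entries and the function names are hypothetical, and matching on a lowercase substring of the description is just one possible search rule.

```python
from dataclasses import dataclass


@dataclass
class QueryEntry:
    """Minimal stand-in for one query in the library (hypothetical fields)."""
    id: str
    domain: str
    description: str


# Made-up sample entries, only to exercise the functions below.
LIBRARY = [
    QueryEntry("EXAMPLE-01", "person", "Count persons per gender concept"),
    QueryEntry("EXAMPLE-02", "person", "Distribution of year of birth"),
    QueryEntry("EXAMPLE-03", "drug_exposure", "Count persons exposed to a drug"),
]


def list_domains(entries):
    """Values for the domain dropdown, sorted alphabetically."""
    return sorted({e.domain for e in entries})


def search(entries, domain, text=""):
    """Queries in the chosen domain whose description contains the search text."""
    needle = text.strip().lower()
    return [
        e for e in entries
        if e.domain == domain and needle in e.description.lower()
    ]


print(list_domains(LIBRARY))
print([e.id for e in search(LIBRARY, "person", "gender")])
```

An empty search text returns every query in the selected domain, which matches the idea of first picking a domain and then narrowing the list.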

The current app already allows you to copy and paste or download the query.

I have noticed in many meetings with potential and current users that building queries is not easy and requires a very deep understanding of the Vocabularies. Sometimes we build an advanced query at their request, but the rest of the community is not aware of it. I think a ShinyApp that runs on the web and is linked from ohdsi.org could help to avoid errors in query building. We could allow for requests through the issue section of the tool. Someone needs to be responsible for the quality of the queries, of course.

Would anyone like to work on this? Other thoughts?

Peter