Thanks @ericaVoss for starting this post. This seems to be a recurring discussion for newcomers to the community, or for those who buy into the OHDSI premise but don’t know how to convince their management to support implementing a solution.

I agree with all of your points, well stated. My additional two cents:

If your organization has a vested interest in learning from observational data by generating and using evidence to inform decision-making processes, then you want that evidence generation and dissemination process to be:

- higher quality

- more consistent

- faster

- lower cost

- greater throughput

- larger impact

The upfront investment to adopt the OMOP common data model as your approach to standardizing the data, and to learn the OHDSI toolkit as your approach to standardizing analytics, can enable all of these characteristics.

Quality: The process of standardizing data to the OMOP CDM forces a greater understanding of the source data and explicit decisions about how to reconcile poor-quality data. By imposing a data quality process upfront in the management of the data, you reduce the burden on the analyst and reduce the risk that ‘known’ data quality issues are overlooked. As @jon_duke presented at the EMA’s meeting ‘A common data model in Europe? Why? Which? How?’ last year, OHDSI is trying to approach quality holistically, supporting four dimensions: data validation, software validation, methods validation, and clinical validation. Developing a quality system requires that you first recognize that you need to develop a system, with defined inputs and defined outputs; then you can develop practices and processes to ensure that the system achieves the desired objectives. Our objective is generating reliable evidence that improves health by promoting better health decisions and better care, and the OMOP CDM and OHDSI tools aim to facilitate that objective.

Consistency: A major challenge facing observational research is that the journey from source data to evidence is an arduous one, with many steps along the way that can make it hard to retrace your path. Data standards, in the form of the OMOP common data model, help provide a consistent data structure (tables, fields, data types), consistent conventions governing how the data are represented (which keep getting better thanks to our THEMIS workgroup), and consistent content through the use of standardized vocabularies. Standardized analytics allow for consistency in cohort definition, covariate construction, analysis design, and results reporting. Essentially, using the OMOP CDM and OHDSI tools allows observational research to be applied by many people following a systematic process that codifies agreed scientific best practice, rather than research being the task of one individual who devises their own ad hoc, one-off approach.

Speed: As @krfeeney showed in her Lightning Talk at the OHDSI Symposium, many organizations measure the time it takes to go from original question to final answer in months or even years. But as @jennareps and the team demonstrated at the same Symposium, if you use the OMOP CDM and the OHDSI tools, it is possible to take an important clinical question in the morning and produce a meaningful insight (externally validated around the world) by that same afternoon. Often, more time may be needed to make sure you’re asking the right question or that your exposure and outcome definitions are what you want. But if you adopt the OHDSI framework, there is a clear path to a fully specified design for an analysis, whether it be clinical characterization, population-level effect estimation, or patient-level prediction. And with a fully specified design in hand, the technical work of implementing and executing the analysis against your data (and extending it across a distributed network of disparate observational databases) is well-defined and has been highly optimized for efficiency. If your feedback loop for asking for and receiving quality evidence were measured in minutes instead of months, just think about how that might transform the way you integrate data into your decision-making processes.

Cost: It is indeed true that adopting the OMOP CDM and OHDSI tools requires a significant upfront investment. But the reality is that if your organization seeks to generate evidence from observational data on a regular basis, then ANY strategy to do that well will require a significant investment, in terms of financial resources for data licensing and technical infrastructure as well as personnel to design and implement studies. Adopting OHDSI isn’t necessarily an incremental resource above and beyond your existing observational research capability; rather, it’s a strategy for how to use the resources you’ve already got to support observational research. There is a resource tradeoff to consider when deciding on your observational research infrastructure: any effort will require resources for data management and resources for data analysis, and different strategies differ in which of these they demand more heavily. A ‘common protocol’ approach requires far fewer resources for data management but substantially greater resources for data analysis. The ‘common data model’ approach tries to balance resources between management and analysis, and relative to other CDM-based communities, OHDSI tends to lean toward greater effort in data standardization to enable greater ease of use and scalability at analysis time. The other cost consideration is ‘buy vs. build’, and here I think the argument for OHDSI is quite compelling: I don’t believe any one organization has the technical and scientific capability to build itself an analytics infrastructure that covers the depth and breadth of features available across the OHDSI ecosystem. And I know that none of the vendors providing commercial offerings can compete with the cost of OHDSI’s open-source solution, because you can’t beat ‘free’.

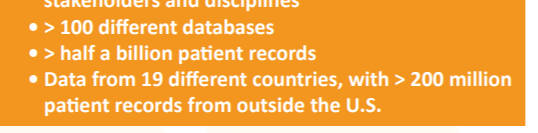

Throughput: One specific focus in the OHDSI ecosystem has been to support ‘evidence at scale’. One dimension of scale is facilitating analysis across networks of databases, which is directly enabled by the use of the OMOP common data model. Analysis code written for one database can be reused for another data source when both databases adhere to the same CDM standard. Applying one analysis procedure against multiple databases is useful whether you are working within your own institution, which may hold multiple databases, or collaborating across multiple institutions as part of an OHDSI network study. The other dimension of scale is designing analysis code that allows multiple instances of the same type of question to be executed concurrently. If you want to estimate the incidence rate of one outcome within one target population, you can do that. But following the same framework, you can simultaneously estimate incidence rates for many outcomes across multiple target populations. If you want to perform a population-level effect estimation study using a propensity score-adjusted new-user cohort design, you can do that for one target-comparator-outcome, but you can also use the same tool to allow for empirical calibration for the target-comparator pair using a large sample of negative control outcomes…or you can study multiple outcomes of interest…or make multiple comparisons using different target cohorts and different comparator cohorts. A key principle within LEGEND (Large-scale Evidence Generation and Evaluation across a Network of Databases) is the consistent application of a best-practice approach to all clinically relevant questions of interest, and the OHDSI tools enable this in a computationally efficient manner.
A similar paradigm was followed in the development of the Patient-Level Prediction package, allowing multiple machine learning algorithms to be applied to multiple target populations and multiple outcomes, and allowing learned models to be externally validated across multiple data sources. ‘Evidence at scale’, when applied across the set of questions that matter to your organization, represents a tremendous opportunity to drastically increase throughput over the sequential, one-at-a-time approach generally followed without such tools.
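
To make the ‘many targets × many outcomes’ idea concrete, here is a minimal Python sketch. It is not OHDSI code and does not use the OMOP CDM tables or any OHDSI package API; the person records, cohort labels (`T1`, `T2`, `O1`, `O2`) and function names are hypothetical. The rate calculation is also deliberately simplified: it counts each outcome at most once per person and uses full follow-up time rather than censoring at the first event. The point is only that once the calculation is written for one target-outcome pair, the same code runs unchanged over every combination.

```python
from itertools import product

# Hypothetical toy data (NOT OMOP CDM tables): each record has a target cohort
# label, follow-up time in years, and the set of outcomes seen during follow-up.
persons = [
    {"target": "T1", "years": 2.0, "outcomes": {"O1"}},
    {"target": "T1", "years": 3.0, "outcomes": set()},
    {"target": "T2", "years": 1.5, "outcomes": {"O1", "O2"}},
    {"target": "T2", "years": 2.5, "outcomes": {"O2"}},
]


def incidence_rate(persons, target, outcome):
    """Events per 1,000 person-years for one target-outcome pair.

    Simplified: each person contributes at most one event and their full
    follow-up time (no censoring at the first event).
    """
    in_target = [p for p in persons if p["target"] == target]
    person_years = sum(p["years"] for p in in_target)
    events = sum(1 for p in in_target if outcome in p["outcomes"])
    return 1000 * events / person_years if person_years else float("nan")


def incidence_rates(persons, targets, outcomes):
    """Apply the same single-pair calculation to every target x outcome combination."""
    return {(t, o): incidence_rate(persons, t, o) for t, o in product(targets, outcomes)}


rates = incidence_rates(persons, ["T1", "T2"], ["O1", "O2"])
for (t, o), rate in sorted(rates.items()):
    print(f"{t} / {o}: {rate:.1f} per 1,000 person-years")
```

Adding another outcome or target population is just one more label in the input lists; nothing about the calculation itself changes, which is the sense in which a standardized design scales from one question to many.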

Impact: Historically, observational research has been received with great skepticism, and with some good reason: the traditional paradigm of one researcher applying one analysis method to one database to address one question about one effect of one exposure on one outcome, and then (if the results seemed noteworthy enough) publishing one finding, has produced a corpus of observational literature that demonstrates substantial publication bias and p-hacking, and considerable susceptibility to systematic error due to confounding, measurement error, and selection bias. Adopting the OMOP common data model and OHDSI toolkit offers the opportunity to chart a different course: to apply empirically demonstrated scientific best practices to generate evidence, and simultaneously produce an empirical evaluation of the quality of that evidence, so you can prove to yourself and to others how reliable and useful it is for meaningfully informing decision-making. Adoption also means becoming part of a collective effort across an international network of researchers and data partners to improve health by empowering a community to collaboratively generate the evidence that promotes better health decisions and better care.

Perhaps the more difficult question to answer is: why WOULDN’T you join the journey in adopting the OMOP CDM and OHDSI analytics?

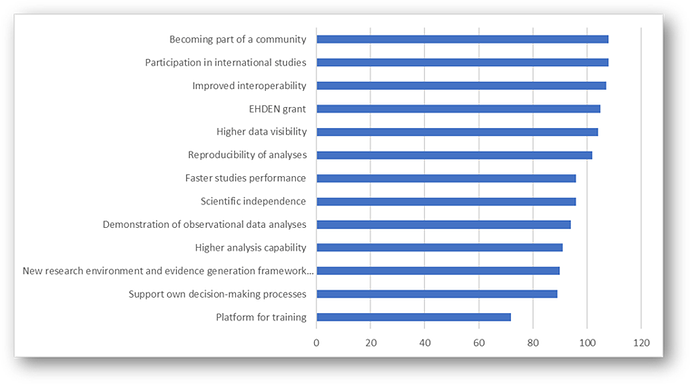

etc.), and enabled by the OMOP CDM, the OHDSI community realizes literally ALL of those benefits!