Hi all! My name is Nathaniel and I’m part of a team that is new to the OHDSI / HADES community. We’re so excited to learn more from the community and hopefully start contributing ourselves soon.

First off, my apologies for the long post before getting to my core questions, but I think the context will be useful. For those willing to read our story and respond, I would be grateful for your feedback!

Goal

Our team conducts epidemiological research from RWD, and builds tools to help make that process easier.

We are trying to understand how we can leverage HADES for OMOP data in an R-based workflow, in order to reduce the amount of code users need to write to create and analyze cohorts from OMOP.

Background

Typically, we write analytical methods ‘from scratch’, using a combination of SQL and R, for each project.

Procedurally, our research typically follows a patient-level framework, whereby we iteratively create a “one-row-per-patient” patient-level table, with summarised variables, filtered down to patients who satisfy inclusion criteria.

Those summarized variables can be things like:

- (date) First date of a diagnosis of

- (logical) Did the patient ever receive drug after

- (character) What state is the patient from?

- (numeric) How many inpatient visits did the patient have within days around event

- (logical) Did the patient have at least 2 inpatient visits within a window of days around event
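To make the idea concrete, here is a rough sketch of how a few of these variables might be derived into a one-row-per-patient table with dplyr. Everything in it is an assumption for illustration only: the toy tables, the column names (`patient_id`, `diagnosis_date`, `visit_type`, and so on), the 30-day window, and the use of the first diagnosis as the index event are all hypothetical and not taken from OMOP or any HADES package.

```r
library(dplyr)

# Toy data; all table and column names here are hypothetical.
patients <- tibble(
  patient_id = c(1, 2, 3),
  state = c("CA", "NY", "TX")
)

diagnoses <- tibble(
  patient_id = c(1, 1, 2),
  diagnosis_date = as.Date(c("2020-03-01", "2020-01-15", "2021-06-10"))
)

visits <- tibble(
  patient_id = c(1, 1, 1, 2),
  visit_type = c("inpatient", "inpatient", "outpatient", "inpatient"),
  visit_date = as.Date(c("2020-01-20", "2020-02-01", "2020-02-10", "2022-01-05"))
)

window_days <- 30  # hypothetical window around the index event

# (date) First diagnosis date per patient
first_dx <- diagnoses %>%
  group_by(patient_id) %>%
  summarise(first_dx_date = min(diagnosis_date), .groups = "drop")

# (numeric) Inpatient visits within +/- window_days of the first diagnosis
n_inpatient <- visits %>%
  filter(visit_type == "inpatient") %>%
  inner_join(first_dx, by = "patient_id") %>%
  filter(abs(as.numeric(visit_date - first_dx_date)) <= window_days) %>%
  count(patient_id, name = "n_inpatient_visits")

# Assemble the one-row-per-patient table and apply an inclusion criterion
patient_level <- patients %>%
  left_join(first_dx, by = "patient_id") %>%
  left_join(n_inpatient, by = "patient_id") %>%
  mutate(
    # Patients with no qualifying visits get a count of 0 rather than NA
    n_inpatient_visits = coalesce(n_inpatient_visits, 0L),
    # (logical) At least 2 inpatient visits within the window
    at_least_2_inpatient = n_inpatient_visits >= 2
  ) %>%
  filter(!is.na(first_dx_date))  # keep only patients with a diagnosis
```

In our real projects each of these steps is usually hand-written SQL against the source tables rather than in-memory data frames, which is the part we are hoping HADES can help us reduce.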

Each of these patient-level variables can require different methods to derive.

Some are simple and can be read directly from an existing patient level table:

- (character) Patient sex

- (date) Patient’s date of death

Others are summaries that require some amount of computation, but are easy to calculate:

- (integer) Total number of inpatient visits within a specific <date_window>

- (date) Date of patient’s earliest treatment of

Others are the results of complex algorithms that require multiple parameters:

- (character) What is a patient’s biomarker status for a biomarker <biomarker> at <date>, where biomarker status is defined as a summary across multiple potential biomarker values within a specific <date_window>

**What we (believe we) know**

Based on my reading of the (fantastic) OHDSI documentation, we understand the following:

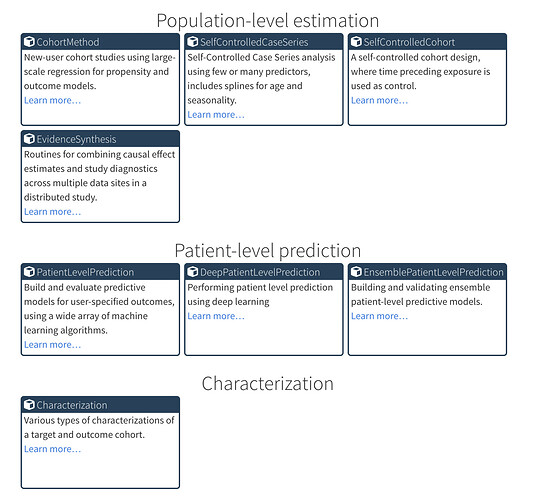

- The HADES packages provide a menu of R-based functionality for many steps in the cohort creation and analysis process.

- The most relevant packages for us are those for constructing and evaluating cohorts, such as Capr, PhenotypeLibrary, and CohortGenerator

- Guidelines for designing a simple study can be found at (Chapter 9 SQL and R | The Book of OHDSI)

- Community-developed queries that implement common analyses and patient phenotypes are stored in locations such as the QueryLibrary (https://github.com/OHDSI/QueryLibrary) and the PhenotypeLibrary package

Questions

Here are some high-level questions I’d love feedback on!

- What are some recommended ‘end-to-end’ workflows for defining and analyzing complex cohorts with highly custom patient-level summarized variables?

  - Is Capr the state of the art? Or are there other approaches?

  - Is there a collection of “gold standard” recommended end-to-end implementations of different kinds of study designs?

- How often should custom SQL be needed to implement patient-level variables?

  - Is this commonly expected, or can it be avoided through HADES packages such as Capr?

- How should community-developed queries be embedded in analysis scripts?

  - Do they need to be copied and pasted, or can they be called programmatically (e.g., through wrapper functions that find, edit, and embed the query)?

  - Is there functionality in HADES (or somewhere else) to access and customize these queries through functions?

- What is the relationship between ATLAS and HADES?

  - Does ATLAS use HADES under the hood? Or are they completely independent codebases?