I’m running executeDiagnostics following the instructions in the SOS video and associated slides.

The code below is giving the error shown.

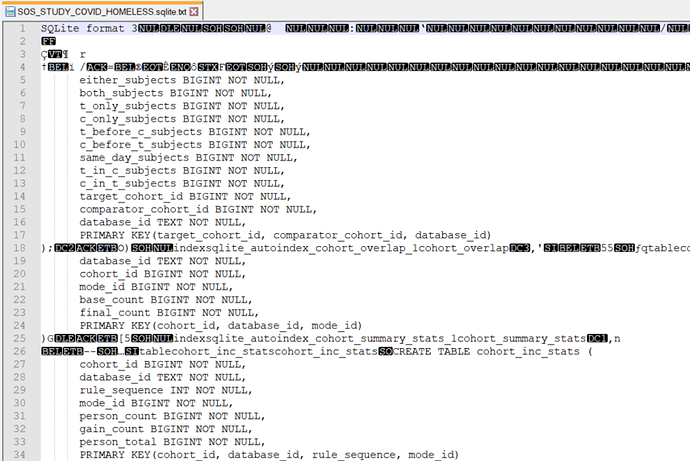

The error seems to be related to a table called “index_event_breakdown”. I’m not sure where this table is supposed to exist: I don’t see it in my CDM instance or in any of the output files.

The full script, output, error log, and output directory (as a zip file renamed to .txt) are attached.

01-phenotype-evaluation.R.txt (3.3 KB)

ouput.txt (9.7 KB)

errorReportR.txt (1.4 KB)

output.zip.txt (25.2 KB)

Any thoughts @anthonysena, @jpegilbert?

Code and Error:

> library(CohortDiagnostics)
>
> executeDiagnostics(
+   cohortDefinitionSet = cohortDefinitionSet,
+   connectionDetails = connectionDetails,
+   cdmDatabaseSchema = cdmDatabaseSchema,
+   cohortDatabaseSchema = cohortDatabaseSchema,
+   cohortTableNames = cohortTableNames,
+   exportFolder = file.path(dataFolder, databaseId),
+   databaseId = databaseId,
+   incremental = TRUE,
+   incrementalFolder = incrementalFolder,
+   minCellCount = 5,
+   runInclusionStatistics = TRUE,
+   runIncludedSourceConcepts = TRUE,
+   runOrphanConcepts = TRUE,
+   runTimeSeries = TRUE,
+   runVisitContext = TRUE,
+   runBreakdownIndexEvents = TRUE,
+   runIncidenceRate = TRUE,
+   runCohortRelationship = TRUE,
+   runTemporalCohortCharacterization = TRUE
+ )

Run Cohort Diagnostics started at 2023-08-10 15:52:36.41267

- Databasename was not provided. Using CDM source table

- Databasedescription was not provided. Using CDM source table

Created folder at D:\_YES_2023-05-28\workspace\SosExamples\_COVID\04-phenotype-evaluation\output\demo_db\demo_cdm

The following fields found in the cohortDefinitionSet will be exported in JSON format as part of metadata field of cohort table:

atlasId,

generateStats,

logicDescription

- Unexpected fields found in table cohort - atlasId, logicDescription, generateStats. These fields will be ignored.

Connecting using Spark JDBC driver

Saving database metadata

Saving database metadata took 0.0716 secs

Counting cohort records and subjects

Counting cohorts took 0.38 secs

- Censoring 0 values (0%) from cohortEntries because value below minimum

- Censoring 0 values (0%) from cohortSubjects because value below minimum

Found 0 of 2 (0.00%) submitted cohorts instantiated. Beginning cohort diagnostics for instantiated cohorts.

Fetching inclusion statistics from files

Exporting cohort concept sets to csv

- Unexpected fields found in table concept_sets - databaseId. These fields will be ignored.

Starting concept set diagnostics

Instantiating concept sets

|=====================================================================================================================| 100%

Creating internal concept counts table

|=====================================================================================================================| 100%

Executing SQL took 5.55 secs

Fetching included source concepts

|=====================================================================================================================| 100%

Executing SQL took 5.69 secs

Finding source codes took 9.94 secs

Breaking down index events

- Breaking down index events for cohort 'Not Homeless (Draft 1)'

- Breaking down index events for cohort 'Homeless (Draft 1)'

An error report has been created at D:\_YES_2023-05-28\workspace\SosExamples\_COVID\04-phenotype-evaluation\output\demo_db\demo_cdm/errorReportR.txt

Error in makeDataExportable(x = data, tableName = "index_event_breakdown", :

- Cannot find required field index_event_breakdown - conceptId, conceptCount, subjectCount, cohortId, domainField, domainTable.

In addition: Warning messages:

1: Unknown or uninitialised column: `isSubset`.

2: Unknown or uninitialised column: `isSubset`.

3: Unknown or uninitialised column: `isSubset`.

4: Unknown or uninitialised column: `isSubset`.

5: There were 2 warnings in `dplyr::summarise()`.

The first warning was:

ℹ In argument: `conceptCount = max(.data$conceptCount)`.

Caused by warning in `max()`:

! no non-missing arguments to max; returning -Inf

ℹ Run dplyr::last_dplyr_warnings() to see the 1 remaining warning.

6: Unknown or uninitialised column: `isSubset`.

7: In eval(expr) :

No primary event criteria concept sets found for cohort id: 4

8: Unknown or uninitialised column: `isSubset`.

9: In eval(expr) :

No primary event criteria concept sets found for cohort id: 5

Error in exists(cacheKey, where = .rs.WorkingDataEnv, inherits = FALSE) :

invalid first argument

Error in assign(cacheKey, frame, .rs.CachedDataEnv) :

attempt to use zero-length variable name

>
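One possible reading of the error message above, which may well be wrong, is that it comes from a check on the columns of an in-memory result rather than from a missing database table. The message lists all six fields as missing, which is what such a check would report if the result it received had no columns at all. The log also says "Found 0 of 2 (0.00%) submitted cohorts instantiated", so an empty result seems plausible. Below is a minimal base-R sketch of that kind of check. The helper `checkRequiredFields` is hypothetical and is not the actual `makeDataExportable` implementation. The field names are copied from the error message, and the empty data frame is an assumption about what the function received.

```r
# Hypothetical helper: NOT the CohortDiagnostics implementation, only an
# illustration of a required-column check on a result data frame.
checkRequiredFields <- function(x, tableName, requiredFields) {
  missingFields <- setdiff(requiredFields, colnames(x))
  if (length(missingFields) > 0) {
    stop(sprintf(
      "Cannot find required field %s - %s.",
      tableName,
      paste(missingFields, collapse = ", ")
    ), call. = FALSE)
  }
  invisible(x)
}

# Field names copied from the error message in the log.
requiredFields <- c("conceptId", "conceptCount", "subjectCount",
                    "cohortId", "domainField", "domainTable")

# Assumption: the breakdown produced an empty result (no rows, no columns),
# for example because no cohort records were found.
emptyResult <- data.frame()

# Capture the error message instead of halting the script.
result <- tryCatch(
  checkRequiredFields(emptyResult, "index_event_breakdown", requiredFields),
  error = function(e) conditionMessage(e)
)
print(result)
```

If this reading is right, the thing to check would be whether the cohort table actually contains rows for the two cohorts before running the diagnostics, rather than looking for a missing table in the CDM.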