I’ve typed up my notes and created a How-to page for setting up Broadsea with Databricks. This is currently a DRAFT version. Let me know what you think.

https://ohdsi.github.io/DatabaseOnSpark/developer-how-tos_broadsea.html

— EDIT -----------------------

I’m adding this as an edit because the forum software won’t let me post three or more replies in a row.

— EDIT -----------------------

Well, it looks like I’m not out of the woods yet…

I’ve run Achilles and put the results in demo_cdm_ach_res. However, when I launch Atlas, navigate to the “Data Sources” page, and select a report, I get the “Loading Report” gif and everything seems to stop there. There doesn’t seem to be any activity in the broadsea or webapi logs in Docker.

I’m thinking this line at the end of the broadsea log in Docker might be a clue, but I’m not sure what it means:

Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/113.0.0.0 Safari/537.36" "172.23.0.1"

2023-05-16 15:09:55 ohdsi-atlas | 2023/05/16 19:09:55 [error] 9#9: *1 "/usr/share/nginx/html/atlas/js/config-gis.js/index.html" is not found (2: No such file or directory), client: 172.23.0.6, server: , request: "GET /atlas/js/config-gis.js/ HTTP/1.1", host: "127.0.0.1", referrer: "http://127.0.0.1/atlas/"
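To make that error line easier to read, here is a minimal Python sketch that splits it into named fields. The regular expression and the `parse_not_found` helper are my own illustration, not part of Broadsea or nginx tooling, and I'm assuming the raw log uses straight double quotes (the forum may have converted them to curly ones). It only shows what nginx reported: a GET for a static file under `/atlas/js/` that the `ohdsi-atlas` container could not find on disk. It does not tell us whether that is related to the stalled report.

```python
import re

# Matches the nginx "is not found" error shape seen in the log line above.
# Assumption: the raw log uses straight double quotes.
NGINX_NOT_FOUND = re.compile(
    r'\[(?P<level>\w+)\] \d+#\d+: \*\d+ '
    r'"(?P<path>[^"]+)" is not found '
    r'\((?P<errno>\d+): (?P<reason>[^)]+)\), '
    r'client: (?P<client>[^,]+), '
    r'server: (?P<server>[^,]*), '
    r'request: "(?P<method>\S+) (?P<uri>\S+) (?P<protocol>[^"]+)"'
)


def parse_not_found(line):
    """Return the named fields of an nginx 'not found' error, or None."""
    match = NGINX_NOT_FOUND.search(line)
    return match.groupdict() if match else None


# The nginx portion of the log line, retyped with straight quotes.
sample = (
    '2023/05/16 19:09:55 [error] 9#9: *1 '
    '"/usr/share/nginx/html/atlas/js/config-gis.js/index.html" is not found '
    '(2: No such file or directory), client: 172.23.0.6, server: , '
    'request: "GET /atlas/js/config-gis.js/ HTTP/1.1", host: "127.0.0.1", '
    'referrer: "http://127.0.0.1/atlas/"'
)

fields = parse_not_found(sample)
if fields is not None:
    # Print each extracted field on its own line.
    for name, value in fields.items():
        print(f"{name}: {value}")
```

Read this way, the entry comes from the Atlas web server looking for a file on its own filesystem, which is a different code path from the WebAPI calls that fetch the Achilles results.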

I’m wondering if this is due to the missing achilles_analysis file (see the last screenshot below) described here, along with a workaround: https://forums.ohdsi.org/t/error-running-achilles-against-databricks/18575/3

Any help resolving this would be greatly appreciated.

Here’s my docker-compose.yml file:

docker-compose.yml.txt (6.3 KB)

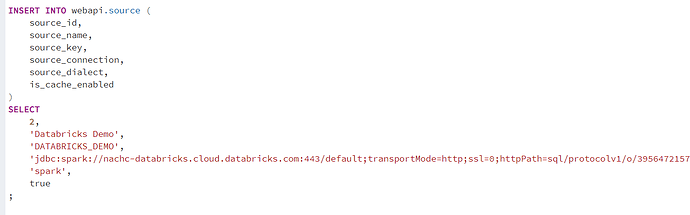

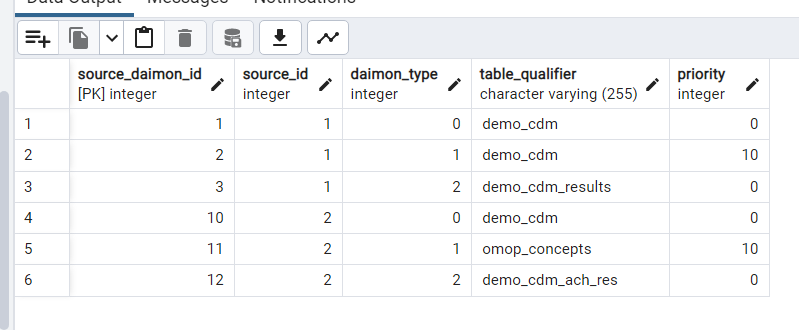

Here’s what my webapi records look like in PostgreSQL:

SOURCE

SOURCE_DAIMON

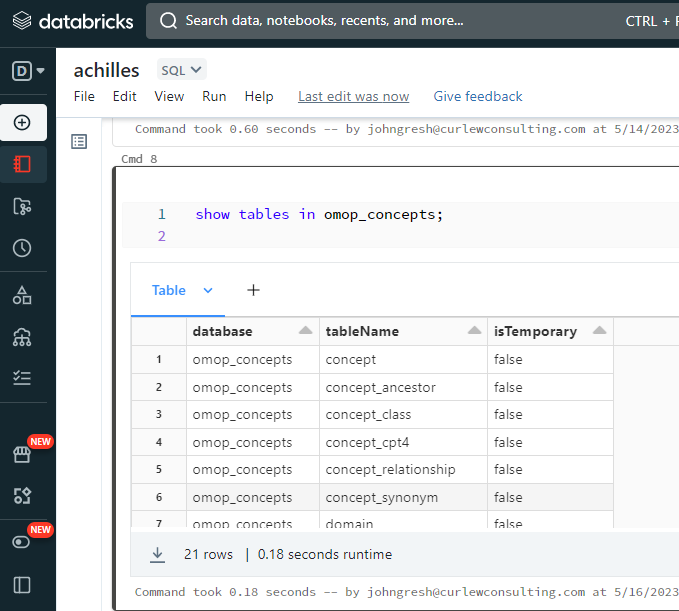

Here’s my vocabulary database in Databricks:

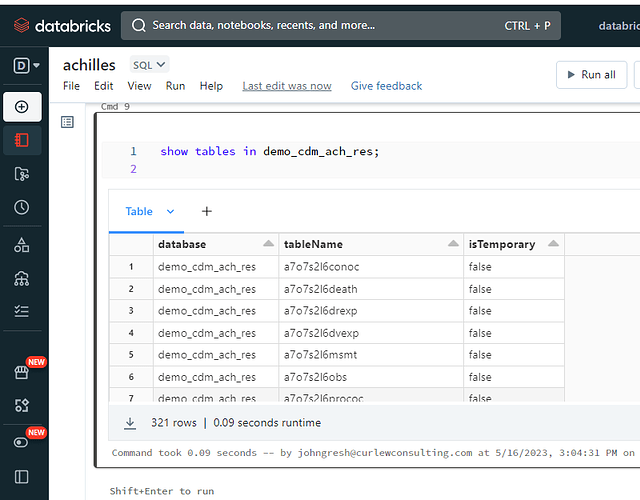

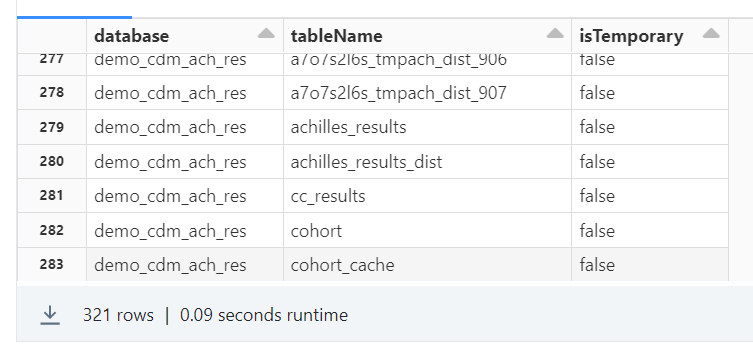

Here’s my Achilles results database in Databricks:

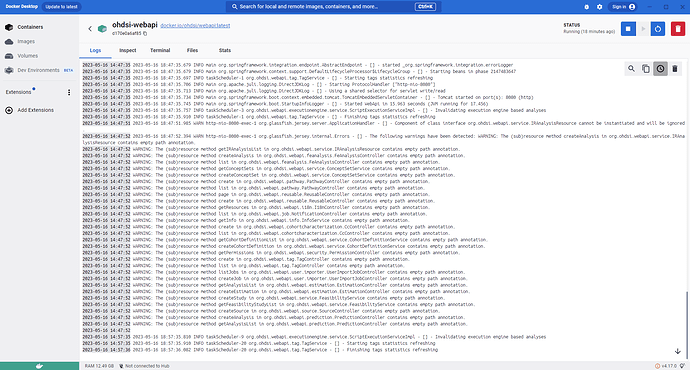

Here’s my webapi log in Docker:

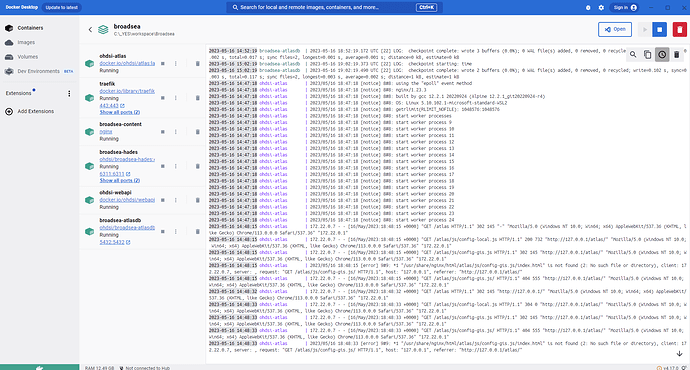

Here’s my broadsea log from Docker:

More tables in the demo_cdm_ach_res schema: