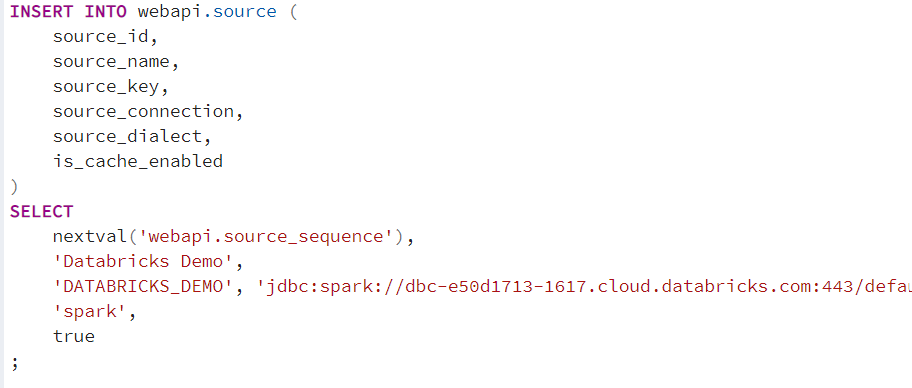

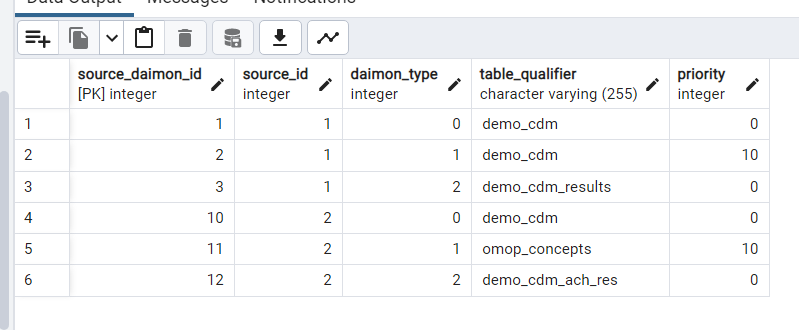

I am trying to deploy Broadsea so that it connects to a CDM database in Databricks. I cloned the Broadsea repo, connected to the Postgres webapi schema, and ran the following source and daimon script:

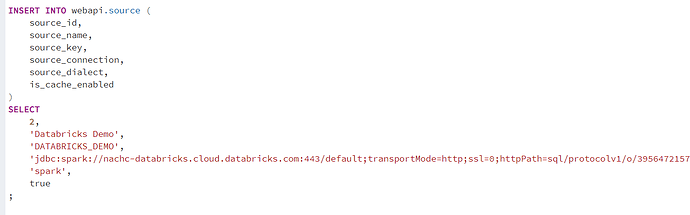

INSERT INTO webapi.source( source_id, source_name, source_key, source_connection, source_dialect, is_cache_enabled)

VALUES (7, 'ADB_OMOP_7', 'ADB_OMOP_7',

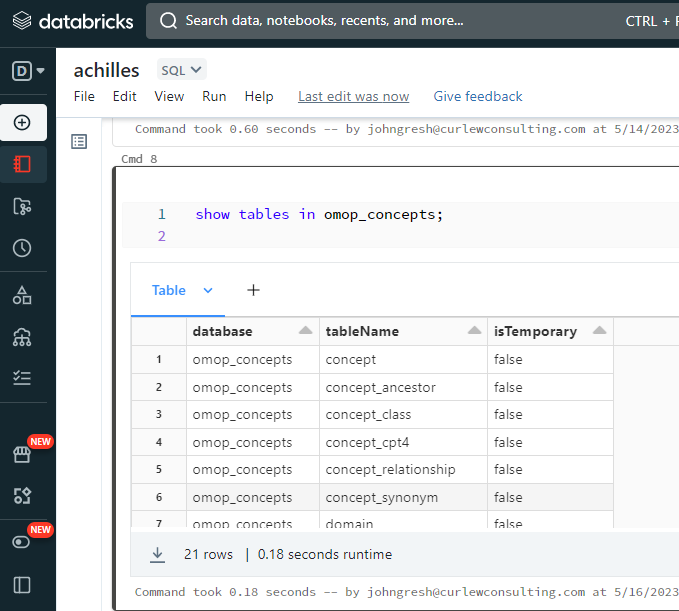

'jdbc:spark://<databricks_url>:443/default;transportMode=http;ssl=1;httpPath=<somePathHere>;AuthMech=3;UseNativeQuery=1;UID=token;PWD=<personalAccessToken>', 'spark', true);

-- CDM daimon

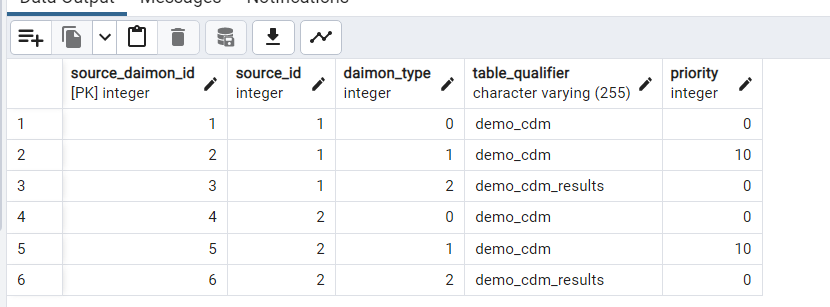

INSERT INTO webapi.source_daimon( source_daimon_id, source_id, daimon_type, table_qualifier, priority) VALUES (23, 7, 0, 'omopcdm_demo', 2);

-- VOCABULARY daimon

INSERT INTO webapi.source_daimon( source_daimon_id, source_id, daimon_type, table_qualifier, priority) VALUES (24, 7, 1, 'omopcdm_demo', 2);

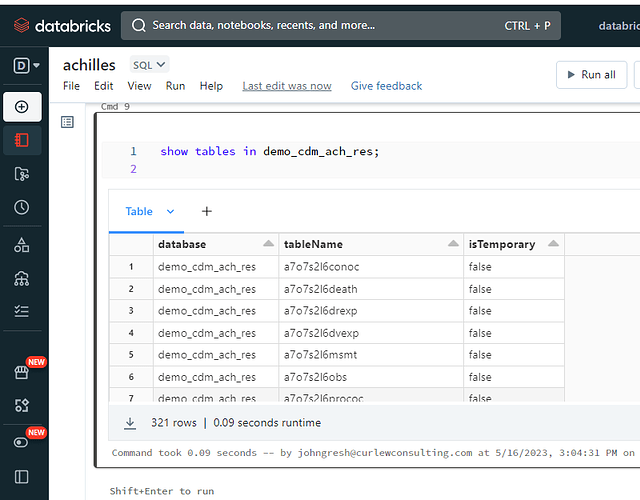

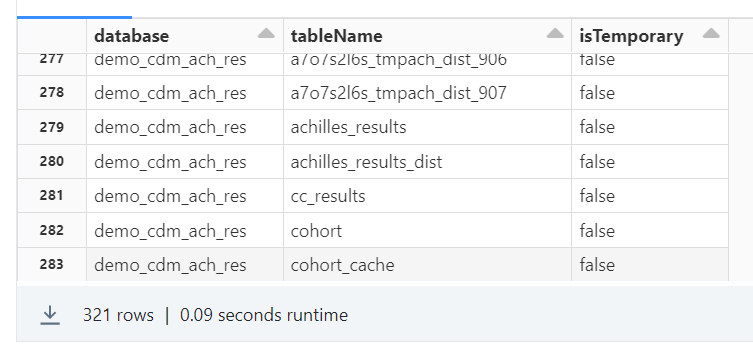

-- RESULTS daimon

INSERT INTO webapi.source_daimon( source_daimon_id, source_id, daimon_type, table_qualifier, priority) VALUES (25, 7, 2, 'omopcdm_demo', 2);

-- EVIDENCE daimon

INSERT INTO webapi.source_daimon( source_daimon_id, source_id, daimon_type, table_qualifier, priority) VALUES (26, 7, 3, 'omopcdm_demo', 2);
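To rule out a problem with the registration itself, a query along these lines could be run against the same webapi schema. It is only a sketch: it uses just the table and column names from the INSERT statements above, and it assumes source_id 7 has no daimons other than the four inserted here, in which case it should return four rows. The connection string is left out of the result on purpose because it contains the access token.

```sql
-- List the registered source together with its daimons.
-- daimon_type values follow the comments in the script above:
-- 0 = CDM, 1 = VOCABULARY, 2 = RESULTS, 3 = EVIDENCE.
SELECT s.source_id,
       s.source_key,
       s.source_dialect,
       d.source_daimon_id,
       d.daimon_type,
       d.table_qualifier,
       d.priority
FROM webapi.source AS s
JOIN webapi.source_daimon AS d
  ON d.source_id = s.source_id
WHERE s.source_id = 7
ORDER BY d.daimon_type;
```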

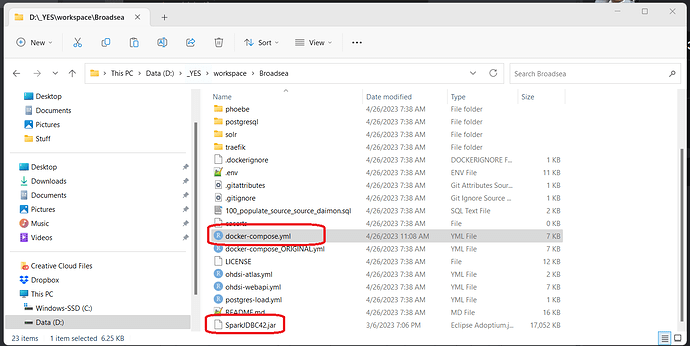

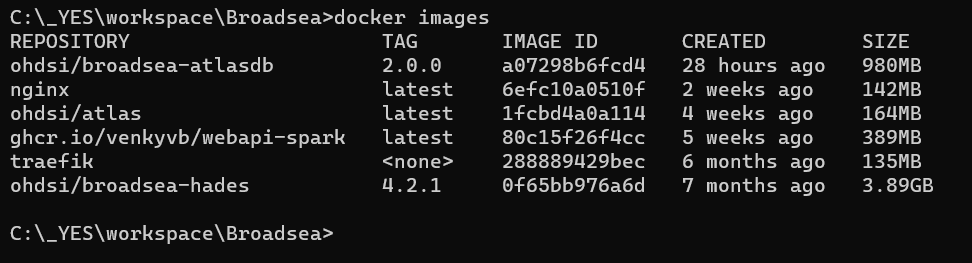

I downloaded the Spark JDBC driver from Databricks and placed it in the root directory of the cloned Broadsea repository (where docker-compose.yml is located).

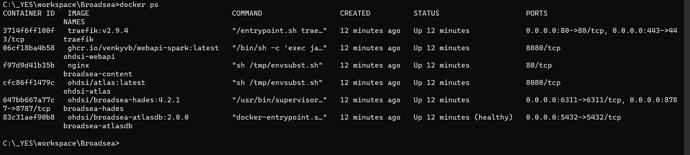

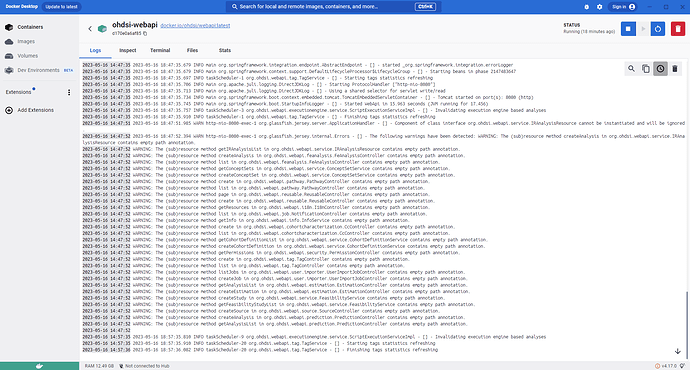

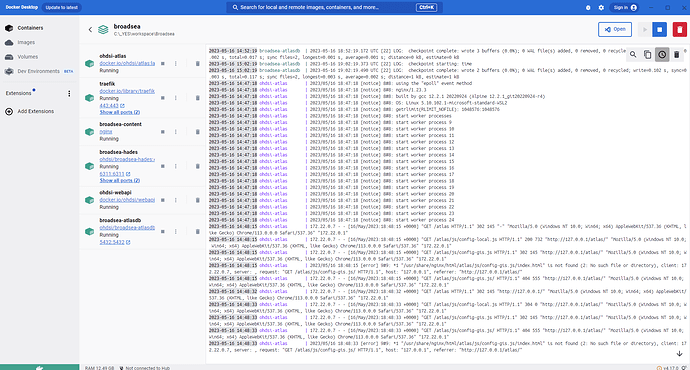

However, when I run Broadsea and check the Docker logs for the ohdsi-webapi container, I see this error:

http-nio-8080-exec-7 com.odysseusinc.logging.LoggingService - [] - Could not get JDBC Connection; nested exception is java.sql.SQLException: No suitable driver found for jdbc:spark:/...

When I check the logs, I see that there is an error loading the Spark JDBC driver:

...

uery.jdbc42.Driver driver. com.simba.googlebigquery.jdbc42.Driver

ohdsi-webapi | 2023-03-18 05:25:33.216 INFO localhost-startStop-1 org.ohdsi.webapi.DataAccessConfig - [] - error loading org.apache.hive.jdbc.HiveDriver driver. org.apache.hive.jdbc.HiveDriver

ohdsi-webapi | 2023-03-18 05:25:33.217 INFO localhost-startStop-1 org.ohdsi.webapi.DataAccessConfig - [] - error loading com.simba.spark.jdbc.Driver driver. com.simba.spark.jdbc.Driver

ohdsi-webapi | 2023-03-18 05:25:33.217 INFO localhost-startStop-1 org.ohdsi.webapi.DataAccessConfig - [] - error loading net.snowflake.client.jdbc.SnowflakeDriver driver. net.snowflake.client.jdbc.SnowflakeDriver

....

Any hints as to what I am doing wrong here?