Hello OHDSI Community!

@Ajit_Londhe

Why might cohort generation show too few records for certain datasets even though the Dashboard reports are accurate?

We have two data sources set up in ATLAS:

- The initial dataset, set up together with the Broadsea installation (ATLAS + WebAPI version 2.10.0), which resides in the same database as the WebAPI schema.

- A newer version of source 1 that has been updated with additional records, which exists in a separate database.

When we generate cohorts in ATLAS, source 1 shows the same or nearly the same number of records as a direct database query, but source 2 returns only about a quarter of the expected records. The Dashboard report under “Data Sources” displays the correct number of records for both sources.

Example:

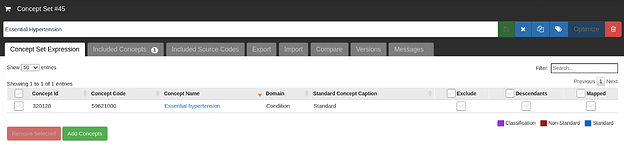

We created a concept set in ATLAS containing a single concept, “Essential Hypertension” (Concept ID: 320128), and defined a cohort with a condition occurrence from this concept set as its initial entry event. We included all events, with no additional inclusion criteria and no other changes to the default definition. We then generated the cohort for both sources in the “Generation” tab and compared the reported counts to a database query: `select count(distinct person_id) from cdm.condition_occurrence where condition_concept_id = 320128`. The count reported for source 1 matched the query result, but the count for source 2 did not.
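In case it helps anyone reproduce the comparison, here is a minimal sketch of the check in Python. It runs against an in-memory SQLite stand-in with made-up toy rows, not our real data. The `results.cohort` table, the cohort definition ID `1`, and the helper name `compare_counts` are assumptions for illustration; the results schema name depends on how each source is configured in WebAPI.

```python
import sqlite3


def compare_counts(conn, concept_id, cohort_definition_id):
    """Return (persons_in_cdm, persons_in_cohort, records_in_cohort).

    Hypothetical helper: compares the CDM query from the post with the
    contents of the generated cohort table for one cohort definition.
    """
    cur = conn.cursor()
    # Same query as in the post: distinct persons with the condition.
    cdm_persons = cur.execute(
        "SELECT COUNT(DISTINCT person_id) "
        "FROM cdm.condition_occurrence "
        "WHERE condition_concept_id = ?",
        (concept_id,),
    ).fetchone()[0]
    # Persons and records in the generated cohort
    # (schema name `results` is an assumption).
    cohort_persons, cohort_records = cur.execute(
        "SELECT COUNT(DISTINCT subject_id), COUNT(*) "
        "FROM results.cohort "
        "WHERE cohort_definition_id = ?",
        (cohort_definition_id,),
    ).fetchone()
    return cdm_persons, cohort_persons, cohort_records


# Toy stand-in for one data source; the rows below are invented.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    ATTACH DATABASE ':memory:' AS cdm;
    ATTACH DATABASE ':memory:' AS results;
    CREATE TABLE cdm.condition_occurrence (
        person_id INTEGER, condition_concept_id INTEGER);
    CREATE TABLE results.cohort (
        cohort_definition_id INTEGER, subject_id INTEGER,
        cohort_start_date TEXT, cohort_end_date TEXT);
    INSERT INTO cdm.condition_occurrence VALUES
        (1, 320128), (1, 320128), (2, 320128), (3, 201826);
    INSERT INTO results.cohort VALUES
        (1, 1, '2020-01-01', '2020-01-01'),
        (1, 1, '2020-06-01', '2020-06-01'),
        (1, 2, '2021-03-05', '2021-03-05');
    """
)

print(compare_counts(conn, 320128, 1))  # (2, 2, 3)
```

Running the same three counts against each source separately keeps persons and records apart, so a person count is not accidentally compared with a record count.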