Hi there,

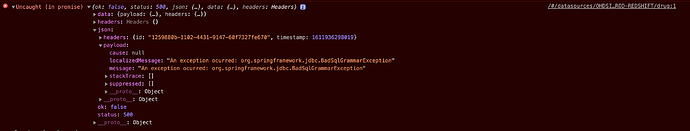

I am running into an issue with ACHILLES in ATLAS and could use some help diagnosing the root cause. The Condition Occurrence, Drug Exposure, and Procedure reports do not load; instead, each one shows the error message “Error loading report”. I’ve searched the forums but haven’t found an obvious solution.

Some info about my instance:

- OMOP CDM is in Redshift

- ATLAS & WebAPI v2.7.7

- CDM contains the MedDRA vocabulary

- concept_hierarchy table has been generated

- I ran ACHILLES in R, and it populated the achilles_results table with over 1 million rows

- I ran the condition treemap query (https://github.com/OHDSI/Achilles/blob/80e6ae9363dd76992c73360c61e3b6afb9a9e5df/inst/sql/sql_server/export/condition/sqlConditionTreemap.sql) and it returns ~10k rows

- I ran exportConditionToJson, and it successfully generated the treemap file plus a set of files in the conditions folder, all of which contain data. I was unable to get AchillesWeb to work, but that may be an unrelated issue.

My current theory is that the problem stems from our condition_occurrence, procedure_occurrence, and drug_exposure tables containing events dated outside patients’ observation periods. Our measurement and visit_occurrence tables have no data outside the observation periods, and those two reports work fine in ATLAS. Based on other forum posts, this should not prevent the reports from running, but maybe I’m wrong.
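One way to test this theory is to count, for each affected table, how many events fall outside every observation period of the person they belong to. Below is a minimal Python sketch of that check. It assumes the rows have already been pulled out of Redshift into plain Python tuples; the function name, the data shapes, and the sample values are hypothetical and only illustrate the logic, and inclusive start/end bounds are an assumption.

```python
from datetime import date
from typing import Dict, Iterable, List, Tuple

# Hypothetical in-memory stand-ins for CDM rows:
#   periods: person_id -> list of (observation_period_start_date, observation_period_end_date)
#   events:  (person_id, event_date), e.g. from condition_occurrence
Period = Tuple[date, date]


def count_events_outside_op(
    periods: Dict[int, List[Period]],
    events: Iterable[Tuple[int, date]],
) -> int:
    """Count events whose date is not covered by any observation period of that person."""
    outside = 0
    for person_id, event_date in events:
        person_periods = periods.get(person_id, [])
        # Treat an event as "inside" if any period contains its date (inclusive bounds, assumed).
        if not any(start <= event_date <= end for start, end in person_periods):
            outside += 1
    return outside


if __name__ == "__main__":
    # Toy data for illustration only.
    periods = {
        1: [(date(2015, 1, 1), date(2018, 12, 31))],
        2: [(date(2016, 6, 1), date(2017, 5, 31))],
    }
    events = [
        (1, date(2016, 3, 15)),  # inside person 1's period
        (1, date(2019, 2, 1)),   # after person 1's period ends
        (2, date(2016, 5, 31)),  # one day before person 2's period starts
        (3, date(2017, 1, 1)),   # person with no observation period at all
    ]
    print(count_events_outside_op(periods, events))  # 3
```

Running the same count against measurement and visit_occurrence (expected to be zero) and against the three failing tables would show whether the pattern really lines up with the broken reports.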

Finally, the RC/DRC (record count / descendant record count) values in ATLAS are not populating for us. This feature seems to depend on ACHILLES, so I wanted to mention it as a possibly related issue.

Thanks in advance for any help!

Katy