We used Odysseus to create our initial OHDSI instance, which used SQL Server. When we shifted to Databricks, we didn’t have to change anything in the OHDSI stack, so the standard install is likely to work fine.

We kept all of the WebAPI calls using Postgres, both because it performs well and because the queries aren’t certified for Databricks.

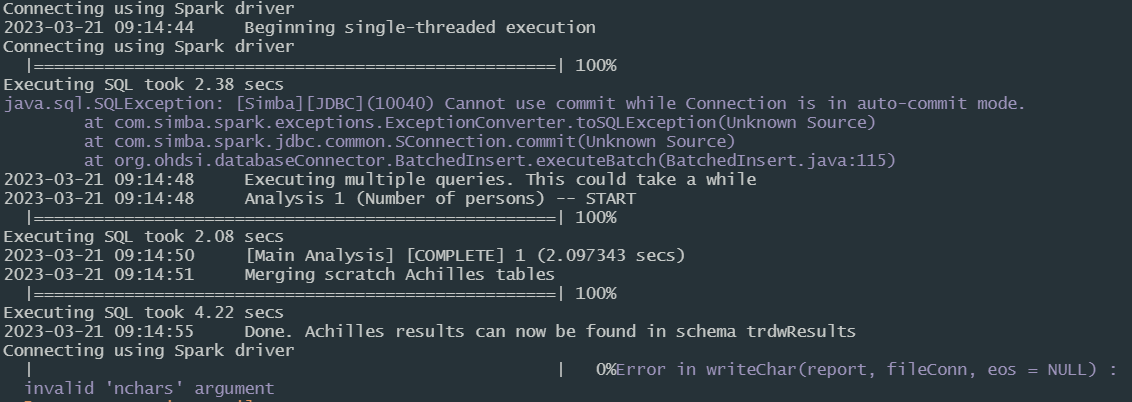

For the last 9 months, we’ve been using Databricks to do all of our OMOP data pipelining & ETL - from creating schemas & tables through populating the OMOP instances, plus running all of the QA. So, rather than using R to run Achilles or DQD directly, we used R to generate Spark SQL, then ran that SQL directly in our ETL pipeline. This has improved performance and made it easier to monitor, start, and resume if anything goes wrong. It also let us easily customize what we needed (e.g. adding data lineage tracking + local columns to certain OMOP tables, plus tweaking or commenting out sections of Achilles code that we didn’t need or that were inefficient) - while maintaining all of the packaged ETL in our git repo to facilitate distributed development, testing, and release management.
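To illustrate the "start and resume" point, here is a minimal Python sketch of running a pre-generated SQL script one statement at a time with a checkpoint file. It is not our actual pipeline code: `split_statements`, `run_resumable`, the checkpoint format, and the `execute` callable (which would wrap whatever Databricks client you use) are all hypothetical names for illustration.

```python
import json
from pathlib import Path
from typing import Callable, List


def split_statements(script: str) -> List[str]:
    """Split a SQL script into statements.

    Naive on purpose: assumes no ';' appears inside string literals or comments.
    """
    return [part.strip() for part in script.split(";") if part.strip()]


def run_resumable(
    statements: List[str],
    execute: Callable[[str], None],
    checkpoint: Path,
) -> int:
    """Run statements in order, skipping those a previous run already finished.

    `execute` is a hypothetical callable that submits one statement and raises
    on failure. Returns the number of statements executed in this call.
    """
    done = 0
    if checkpoint.exists():
        done = json.loads(checkpoint.read_text())["done"]

    ran = 0
    for index, sql in enumerate(statements):
        if index < done:
            continue  # completed in an earlier run
        execute(sql)  # an exception here leaves the checkpoint at the last success
        checkpoint.write_text(json.dumps({"done": index + 1}))
        ran += 1
    return ran
```

If a statement fails, rerunning `run_resumable` with the same checkpoint path picks up at the failed statement rather than starting over. This only makes sense if the generated statements are safe to run in order from any point, which is an assumption you would need to check for your own scripts.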

As a last thought, I recommend using a Databricks SQL Warehouse instance (rather than a shared compute cluster) to support your instance. The Warehouse approach has better caching, logging, and throughput than a data engineering compute cluster.

Although Databricks gives us much better performance on standard Atlas analyses (e.g. cohort generation, characterization, incidence rates, etc.), we haven’t gotten to the point that we can use it for GUI navigation (e.g. searching for concepts and clicking through hierarchy and relationships). So, we have a separate vocabulary_only data source connected to SQL Server to get the desired GUI performance. As a benchmark, when we use SQL Server to do vocabulary searches, the usual response time is about 3 seconds per click. Using a Databricks SQL Warehouse, it is about 6-8 seconds per click (hierarchy or relationships). Using a non-warehouse compute cluster, it is about 25-30 seconds per click. My hope is that with better table or query optimization, we can get comparable performance on GUI navigation using a Databricks SQL Warehouse.
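If you want to compare backends the same way, a small timing harness is enough. The sketch below is only an assumption about how one might measure it, not how the numbers above were collected: `time_clicks` is a hypothetical helper, and `run_query` stands in for whatever function issues one vocabulary lookup against the data source under test.

```python
import statistics
import time
from typing import Callable, Dict


def time_clicks(run_query: Callable[[], object], repeats: int = 5) -> Dict[str, float]:
    """Time repeated calls to `run_query` and summarize the latency in seconds.

    `run_query` is a hypothetical zero-argument callable that performs one
    lookup (e.g. fetching a concept's hierarchy) and returns when it is done.
    """
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_query()
        samples.append(time.perf_counter() - start)
    return {"median_s": statistics.median(samples), "max_s": max(samples)}
```

Running the same lookup through `time_clicks` once per backend gives a like-for-like median. Repeating the call also shows how much caching changes the result after the first request.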

Theoretically, Serverless Warehouses will provide even better throughput, so that may be worth exploring to ensure that the GUI has adequate performance.