Greg, I like this approach, but it doesn’t fully appreciate how hard it is to reconcile the minutiae of the current paradigm for running a study with the newer OHDSI paradigm. We’re assuming that all end users are capable of mapping a question to our preferred input. I would argue that we may get there, but that’s not where we are today.

To be honest, we have no real way, at the moment, to adjudicate a study question in a communal fashion without an extensive amount of hot-potato JSON sharing. For instance, as we’ve been updating Ts and Os from the Live PLP exercise we ran at the US Symposium, there’s been no clean way to share definitions: we all use different instances of ATLAS, and importing JSON definitions can be a real pain for the novice user. (Yes, I concede it’s trainable… but let’s admit, there’s a learning curve here even for the savvy.) We’ve had to set up calls to align verbally, and then someone takes on the task of updating the T or O depending on what’s agreed.

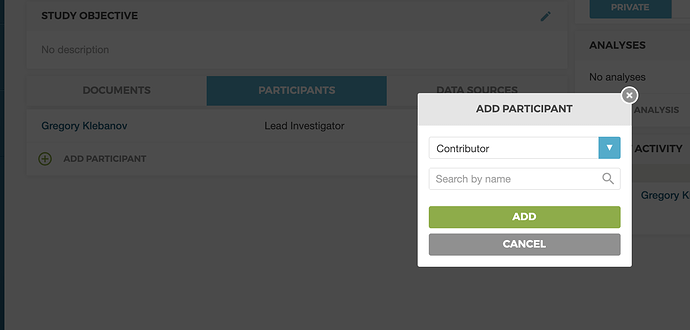

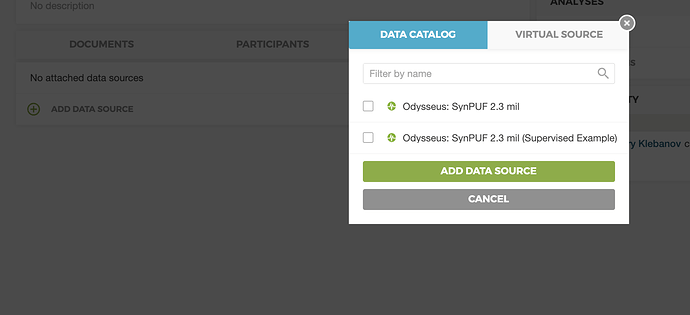

I get that ARACHNE is intended as a communal workspace, but until it is available across sites (which I’m hopeful will be a future state), we need to think broadly about a more sustainable, non-technology way to make it easier to share the human piece of research, namely a priori assumptions. We lose new investigators when we complicate things by requiring them to know every detail of how a concept set works in order to build a cohort. I’d argue we need to be able to help an investigator translate their ideal cohort with a few guided workflows.

Study questions die when we make it too hard. I’ve lived through two network studies this year and I still feel there’s a huge gap in how we get out of the gate. Even with the best preparation, we still have logistical constraints. I also believe a study question cannot be robust enough for a network analysis unless you can characterize the question in multiple databases and make sure you understand what (if any) differences exist.

And sadly, there’s no R package if we can’t agree on who belongs in the T, C, and O.

So, perhaps I am a Grinch, but I’d love to come back to Christian’s astute commentary on focusing on a specific technical solution:

Technology is important… but we also need a social change in how we think about iterating and how we adjudicate our inputs in a way we feel comfortable with. Today, we are spending far too much time iterating in our respective silos, and sharing JSONs isn’t expediting this.

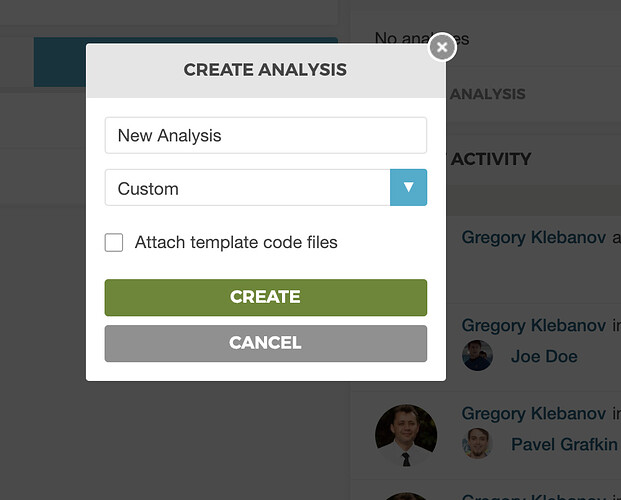

) features in there, but I have no doubt that it can be improved and made better. As a first step, maybe we pick an example study and test drive it through this platform all together?

. Of course I agree to push for this as much as possible. However, the fact that you make a change to code does not necessarily mean you are not transparent or reproducible, you have to make sure you are.