In yesterday’s community call, @Christian_Reich made an interesting comment that we don’t have a standard table 1. To clarify what he meant: table 1 in pretty much every epi paper is a description of the population included in the study. Some examples are this table, this table, and this table (although in that last one it’s table 2). My question: would it be possible to create a standard table 1 for OHDSI studies?

But first, I’d like to discuss the purpose of such a table. I have a feeling people are conflating the following two purposes:

- To characterize the population so we can understand when our results are valid, and to what extent we may generalize to people outside of the study population

- To characterize how the target and comparator populations differ (two of the examples referenced above show this, one before and one after adjusting for confounding).

We already have tools for characterizing a population (in ATLAS) and for characterizing balance before and after an adjustment strategy (in CohortMethod), but the problem with those is that they include everything: tens of thousands of variables, which would require a very small font to fit within the six-page limit for our paper. So the question is which variables to include. In the past, we’ve left it to experts to pick these variables, because experts understand the real world and know which variables are effect modifiers (and therefore relevant for generalizability) and which are confounders (and therefore relevant for covariate balance). However, maybe in OHDSI we would like something more data-driven.
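To make "more data-driven" a bit more concrete for the balance purpose, here is a minimal sketch of one possible heuristic: rank covariates by the absolute standardized difference between target and comparator and keep only the top k. This is my own illustration, not an existing ATLAS or CohortMethod feature; `std_diff` and `select_covariates` are hypothetical helpers, the input is assumed to be a simple name-to-proportion mapping for binary covariates, and the proportions are made up.

```python
import math


def std_diff(p_target: float, p_comparator: float) -> float:
    """Standardized difference for a binary covariate, using the pooled-variance denominator."""
    pooled_var = (p_target * (1 - p_target) + p_comparator * (1 - p_comparator)) / 2
    if pooled_var == 0:  # covariate is 0% or 100% in both cohorts
        return 0.0
    return (p_target - p_comparator) / math.sqrt(pooled_var)


def select_covariates(target: dict, comparator: dict, k: int) -> list:
    """Return the k covariates with the largest absolute standardized difference.

    Hypothetical input: target / comparator map covariate name -> proportion
    of the cohort having that covariate. Covariates missing from either
    cohort are skipped.
    """
    scored = [(name, std_diff(target[name], comparator[name]))
              for name in target if name in comparator]
    scored.sort(key=lambda item: abs(item[1]), reverse=True)
    return scored[:k]


# Made-up proportions, purely for illustration.
target = {"female": 0.52, "diabetes": 0.30, "hypertension": 0.45, "statin_use": 0.20}
comparator = {"female": 0.50, "diabetes": 0.18, "hypertension": 0.44, "statin_use": 0.35}

for name, smd in select_covariates(target, comparator, k=2):
    print(f"{name}: {smd:+.2f}")
```

With these numbers, `statin_use` and `diabetes` come out on top. Note that this only addresses the second purpose (covariate balance); ranking by imbalance says nothing about which variables are effect modifiers, so it would not help with generalizability.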

Here are some options for an OHDSI table 1:

- Refuse to provide a table 1 on the basis that it is simply an arbitrary selection of variables to show.

- Refuse to provide a table 1, and point to an interactive tool allowing readers to explore all cohort characteristics.

- Provide a table 1 that is generated by a to-be-developed tool for creating custom tables 1.

- Provide a table 1 that is standard across all our studies, with a predefined selection of variables (e.g., age, sex, some set of major comorbidities, some set of major drug classes, some set of disease scores).
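
For the last option, once a variable list is agreed on, the table itself could be generated mechanically. Below is a minimal sketch, assuming each cohort is available as a list of person records with `age`, `sex` and a set of binary `flags`. The record layout, `table1`, `table1_column`, and the covariate names in `PREDEFINED_FLAGS` are all hypothetical placeholders, not an OHDSI data model or an agreed selection.

```python
from statistics import mean, stdev

# Hypothetical predefined covariates; choosing this list is exactly the open question.
PREDEFINED_FLAGS = ["diabetes", "hypertension", "heart_failure", "statin_use"]


def table1_column(persons: list) -> dict:
    """Summarize one cohort: N, mean (SD) age, % female, % with each predefined flag."""
    n = len(persons)
    ages = [p["age"] for p in persons]  # stdev needs at least two persons
    column = {
        "N": str(n),
        "Age, mean (SD)": f"{mean(ages):.1f} ({stdev(ages):.1f})",
        "Female, %": f"{100 * sum(p['sex'] == 'F' for p in persons) / n:.1f}",
    }
    for flag in PREDEFINED_FLAGS:
        share = 100 * sum(flag in p["flags"] for p in persons) / n
        column[f"{flag}, %"] = f"{share:.1f}"
    return column


def table1(cohorts: dict) -> list:
    """Build a header row plus one row per characteristic, one column per cohort."""
    columns = {name: table1_column(persons) for name, persons in cohorts.items()}
    row_labels = list(next(iter(columns.values())).keys())
    header = ["Characteristic", *columns.keys()]
    rows = [[label, *(col[label] for col in columns.values())] for label in row_labels]
    return [header, *rows]


# Tiny made-up cohorts, purely for illustration.
target = [
    {"age": 60, "sex": "F", "flags": {"diabetes"}},
    {"age": 70, "sex": "M", "flags": {"diabetes", "statin_use"}},
]
comparator = [
    {"age": 50, "sex": "F", "flags": set()},
    {"age": 54, "sex": "F", "flags": {"hypertension"}},
]

for row in table1({"Target": target, "Comparator": comparator}):
    print(" | ".join(row))
```

The appeal of a fixed list is that every study reports the same rows, so tables are comparable across studies; the cost is that a study-specific confounder or effect modifier may not appear unless it happens to be on the list.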

Any thoughts?

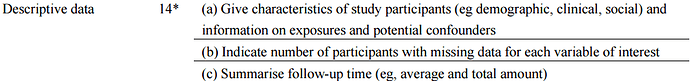

However, as far as I can see, STROBE is not very specific about what should go into table 1. Here’s what I could find for cohort studies:

You only hear the few who complain. The ocean of happy followers and Martijn admirers is silent.