- Data presence should be reliable, and data absence should be reliable

Thank you @Christian_Reich for stating this so clearly. This is my issue with the desire to include “old” information from before the observation period: the absence of data is particularly hard to explain. People often want to include a few extra bits of information to get a few more people with an exposure of interest, forgetting that a lot of similar people are missing.

As an analogy, the issue is very much the same as with outcomes. Just as missing data in the outcomes period can be informative, data outside the observation period can be informative. This can bias outcomes associated with the exposure if the exposure definition period extends outside a contiguous observation period for some people.
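To make this concrete, here is a minimal sketch of how one might separate records that fall inside a person's observation periods from those that fall outside. The function name `split_by_observation` and the plain `(start, end)` tuples are my own illustration, not anything from the CDM or the OHDSI tools.

```python
from datetime import date


def split_by_observation(event_dates, observation_periods):
    """Split event dates into those inside and those outside observation.

    observation_periods is a list of (start, end) date pairs, both inclusive.
    This is a hypothetical helper for illustration only.
    """
    inside, outside = [], []
    for event_date in event_dates:
        covered = any(start <= event_date <= end
                      for start, end in observation_periods)
        (inside if covered else outside).append(event_date)
    return inside, outside


periods = [(date(2019, 1, 1), date(2020, 12, 31))]
events = [date(2018, 6, 15), date(2019, 3, 1), date(2021, 2, 1)]
inside, outside = split_by_observation(events, periods)
```

The records in `outside` are the ones where neither presence nor absence of data can be trusted, because similar people with no record in that window simply cannot be seen.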

Note that this is a different issue from forcing everyone to have the same duration of observation period before an index date (for example, forcing everyone to have a 12-month look-back period when some have valid, longer ones available). In this case, for people with observation periods of different lengths, it is better to use all the information. See Gilbertson et al. (I am ignoring the situation where the definition you are applying requires a specific duration of observation, in which case you should use that.)
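A rough sketch of the two look-back choices, assuming observation periods are simple inclusive `(start, end)` date pairs. The helper `lookback_window` and its `required_days` parameter are hypothetical names for illustration.

```python
from datetime import date, timedelta


def lookback_window(index_date, observation_periods, required_days=None):
    """Return the (start, end) look-back window ending at index_date.

    With required_days=None, use all observed time before the index date.
    With required_days set, return a fixed window, or None if the person
    does not have that much observed time. Hypothetical helper.
    """
    for start, end in observation_periods:
        if start <= index_date <= end:
            if required_days is None:
                return (start, index_date)
            window_start = index_date - timedelta(days=required_days)
            return (window_start, index_date) if window_start >= start else None
    # The index date is not inside any observation period.
    return None


periods = [(date(2018, 1, 1), date(2021, 12, 31))]
index = date(2020, 6, 1)
all_available = lookback_window(index, periods)
fixed_year = lookback_window(index, periods, required_days=365)
too_long = lookback_window(index, periods, required_days=1000)
```

In both cases the window never reaches back before the start of the observation period that contains the index date.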

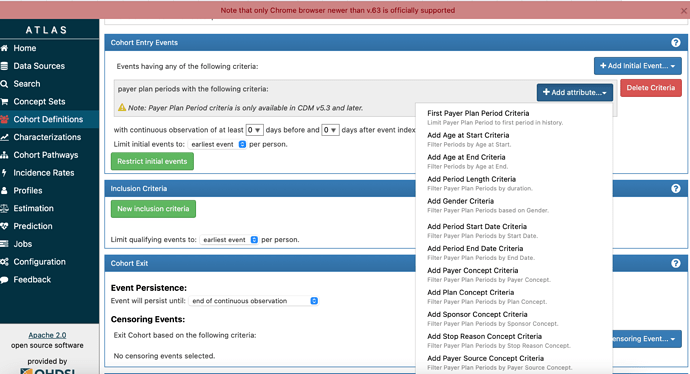

Observation periods can be constructed dynamically using the payer_plan_period, or through other modifications to the observation period table. We support that in our application and our data model, and we prefer that flexibility for our purposes. But I can also attest to the fact that dicing the data in this way can affect performance with large datasets. The OHDSI approach is to place information in the CDM that is ready to be analyzed and to consider analytical performance. This isn’t always explicitly stated with the OMOP CDM, so it is important that you clarified this. I think any change needs to be considered in the context of the breaking changes it would introduce.
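As an illustration of building observation periods dynamically, here is a minimal sketch that merges enrollment spans (such as rows from payer_plan_period) into contiguous periods. The function `merge_periods` and the `max_gap_days` parameter are assumptions of mine. This is not how our application or any OHDSI tool does it, and the permissible gap is a study-level decision.

```python
from datetime import date


def merge_periods(periods, max_gap_days=0):
    """Merge inclusive (start, end) date pairs into contiguous periods.

    Two spans are joined when the number of uncovered days between them
    is at most max_gap_days. Hypothetical helper for illustration.
    """
    merged = []
    for start, end in sorted(periods):
        if merged:
            prev_start, prev_end = merged[-1]
            # Days strictly between the previous end and this start.
            gap = (start - prev_end).days - 1
            if gap <= max_gap_days:
                merged[-1] = (prev_start, max(prev_end, end))
                continue
        merged.append((start, end))
    return merged


plan_spans = [
    (date(2020, 7, 1), date(2020, 12, 31)),
    (date(2020, 1, 1), date(2020, 6, 30)),
    (date(2021, 3, 1), date(2021, 12, 31)),
]
strict = merge_periods(plan_spans)
lenient = merge_periods(plan_spans, max_gap_days=59)
```

Doing this at query time for every person is where the performance cost on large datasets comes from, which is one argument for materializing the result in the observation period table.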

If we know they are no longer enrolled but know they died in the future, we are missing a snippet of time that might explain what truly happened to the patient.