Hello folks,

I have a list of drug names from raw source data.

For example, I have more than 1,000 unique drug names, but many of them contain spelling mistakes. These 1,000 terms are already a shortlist based on frequency count.

For example, we have ISONIAZID 300MG TAB, ISONAZID 300MG TAB, and ISNIAZID 300MG.

Through manual review, I found that they are all the same drug and differ only by a spelling mistake (or spacing issues). The problem is that several other drugs have similar spelling mistakes, and I am not sure how to group each set of variants into one (meaning, rename them with the correct spelling).
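To make the grouping idea concrete, here is a minimal sketch using only Python's standard-library `difflib`. It assumes the most frequent spelling in a group is the correct one. The function names, the 0.8 threshold, and the frequency counts (as well as the RIFAMPICIN entry) are made up for illustration, so this is a starting point rather than a validated method.

```python
from difflib import SequenceMatcher

def normalize(term):
    # Upper-case and collapse repeated whitespace so case/spacing differences vanish.
    return " ".join(term.upper().split())

def group_similar(term_counts, threshold=0.8):
    """Greedily group terms that look like spelling variants of each other.

    term_counts: dict mapping raw term -> frequency count.
    Returns a dict mapping canonical (normalized) term -> list of raw terms.
    """
    groups = {}
    # Visit the most frequent terms first, ASSUMING the commonest spelling is right.
    for term, _ in sorted(term_counts.items(), key=lambda kv: -kv[1]):
        norm = normalize(term)
        for canonical in groups:
            if SequenceMatcher(None, norm, canonical).ratio() >= threshold:
                groups[canonical].append(term)
                break
        else:
            # No existing group is similar enough, so start a new one.
            groups[norm] = [term]
    return groups

# Hypothetical counts; only the ISONIAZID variants come from my data.
counts = {
    "ISONIAZID 300MG TAB": 50,
    "ISONAZID 300MG TAB": 3,
    "ISNIAZID 300MG": 2,
    "RIFAMPICIN 150MG CAP": 40,
}
for canonical, variants in group_similar(counts).items():
    print(canonical, "<-", variants)
```

One caveat with this kind of string similarity: names that differ only in strength (say, 100MG versus 300MG) are also very similar as strings, so the groups would still need a manual check before renaming anything.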

Is there any way to correct these spelling mistakes and map the terms to OMOP concepts efficiently?

If I had only 200-300 terms, I would have done it manually, but since we have more than 1,000, I am not sure how to get rid of these spelling mistakes efficiently.

Or do I have to manually correct the spelling mistakes?

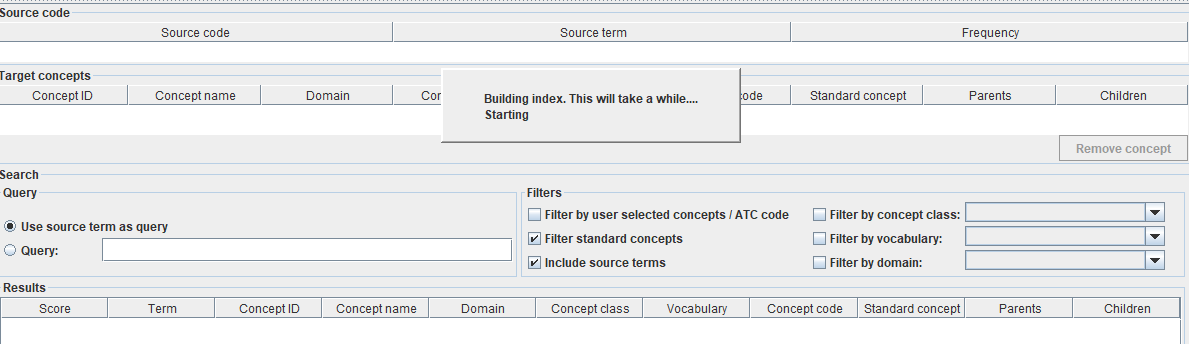

I tried Usagi as well. So, is the workflow like this?

- Upload a CSV of the raw terms (which contain spelling mistakes) to Usagi

- Get the closest match

- Review these 1K records again and fix the outliers (the ones that are mapped incorrectly)
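The "closest match, then review the outliers" steps can be sketched outside Usagi as well. This is not how Usagi works internally; it is a plain `difflib` illustration of scoring each raw term against a list of reference names and sending only the low-scoring matches to manual review. The reference list, the function names, and the 0.9 cutoff are all assumptions for the example.

```python
from difflib import SequenceMatcher

def best_match(term, reference_names):
    """Return (closest reference name, similarity score between 0 and 1)."""
    scored = [(SequenceMatcher(None, term, ref).ratio(), ref) for ref in reference_names]
    score, ref = max(scored)
    return ref, score

def triage(raw_terms, reference_names, review_below=0.9):
    """Split matches into an auto-accepted list and a needs-review list."""
    accepted, review = [], []
    for term in raw_terms:
        ref, score = best_match(term, reference_names)
        row = (term, ref, round(score, 3))
        (accepted if score >= review_below else review).append(row)
    # Lowest scores first, so the most doubtful mappings are looked at first.
    review.sort(key=lambda row: row[2])
    return accepted, review

# Hypothetical reference names; in practice these would come from the target vocabulary.
reference = ["ISONIAZID 300MG TAB", "RIFAMPICIN 150MG CAP"]
raw = ["ISONAZID 300MG TAB", "ISNIAZID 300MG"]

accepted, review = triage(raw, reference)
print("accepted:", accepted)
print("review:", review)
```

The point of the cutoff is only to shrink the review pile: high-scoring matches could be spot-checked, while everything below the cutoff gets a full manual look.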

How do you handle issues like this during your mapping phase? Any tips or approaches that you follow would be very helpful to know. I understand it may not be possible to achieve 100% accuracy while mapping, but learning from your experience will definitely be helpful.